Wednesday, 14 March 2012 - Jan 2022


Time may not be what you think it is. Distance may not be what you observe. Space may be something else altogether. Maybe gravity can finally be understood.


Economics and the Natural Sciences

There are parallels between economics and the natural sciences. The law of conservation of energy and the law of conservation of financial resources appear evident in how market forces behave. Market forces represent a closed system in which growth does not take place without being cancelled out by the equal and opposite interaction of demand and supply. This cancelling out inhibits growth and gives rise to either inflation or deflation. This paper will analyse some of the parallels between contemporary economics and the natural sciences. It will observe how similar ideas concerning reality and the foundations of the natural sciences share substantive logical impasses with contemporary economics. These impasses may hinder the capacity of human beings to fully understand the nature of reality, and so influence the evolution of the natural sciences. Using a purely theoretical approach, this paper will attempt to draw inferences backed by a teleological flow of logic, the validity of which is open to debate. There is no better place to begin than with demand and supply. Amongst the most renowned scientists in the field of physics is Albert Einstein. In the same way that contemporary economics uses supply and demand, Einstein’s theory of reality, or Relativity Theory, uses two basic structures to build an understanding of the Universe: Space and Time. The known Universe can be described as being governed by Space-Time; it is therefore a Space-Time Universe. This concept forms the basic building block of Einstein’s model of the Universe and of how it functions.

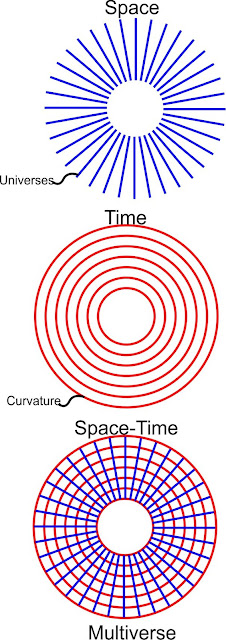

What the diagram demonstrates is that the entire technical structure of conventional physics depends on the interaction of Space and Time. Without this interaction it appears no logical inferences can be made, and nearly all calculations depend on it to arrive at mathematically accurate descriptions or predictions concerning the nature of the Universe. However, just as the expenditure fallacy hinders the ability to end poverty in contemporary economics, there may be fundamental fallacies in this model of the Universe that hinder progress in physics. It is possible at this stage to identify fundamental inaccuracies in the Einsteinian view of the nature of the Universe. The first critical inaccuracy in this model may stem from how it identifies and describes Space. Einstein may make two fundamental mistakes in the model he uses for his basic understanding of the Universe. Firstly, he assumes that distance and Space are one and the same. For example, the measurable distance between a proton and an electron at the quantum level entails that there is “Space” between them. This assumption may be logically inaccurate. “The Space-Time Universe proposed by Einstein could be flawed for ….[the] reason that in his analysis Einstein may not clearly differentiate between “distance” and “Space”. This can lead to a number of inaccurate descriptions about the nature of Space and Time. The concept of distance belongs to a... construct in which ‘Space’ being a vacuum is accommodated or accepted to validate distance or separation between objects [or matter]. However, Space… [may] not be the same as distance...
In other words one may talk about the distance between the earth and the moon, however, it would then be incorrect using the same principle to theorise that there is any Space between the earth and the moon... If someone were to say to you, ‘Look, I need some Space,’ technically it would be completely different from saying, ‘Look, I need some distance.’ To give you distance they could simply move further away from you, to give you Space they could remain right next to you and hand you Space…. Einstein might point at the Sun theorising on how the [Space-Time] continuum functions and say it is in Space, when in fact it is not in Space since this conclusion could not have been made without factoring in distance.”[1] This flaw in Einstein’s model is made glaringly obvious by two features of his analysis. Firstly, Einstein groups Space and Time together. Time in his analysis is inseparable from distance; in other words, by incorporating Time into his interpretation of Space, Einstein automatically computes distance in the workings of his theories, which, as we shall go on to discern, may be a fundamentally flawed method. Secondly, it becomes clear that Einstein is aware of these weaknesses in his own model, as he ascribes its flaws, or what it fails to explain, to the existence of the ether. Einstein seems compelled to accept the existence of an ether where he states, “Newtonian action at a distance is only apparently immediate action at a distance, but in truth is conveyed by a medium permeating space, whether by movements or by elastic deformation of this medium. Thus the endeavour toward a unified view of the nature of forces leads to the hypothesis of an ether.
This hypothesis, to be sure, did not at first bring with it any advance in the theory of gravitation or in physics generally, so that it became customary to treat Newton's law of force as an axiom not further reducible.”[2] Einstein further notes the characteristics of the ether: “Within matter it takes part in the motion of matter and in empty space it has everywhere a velocity; so that the ether has a definitely assigned velocity throughout the whole of space.”[3] However, he further states that, “More careful reflection teaches us, however, that the special theory of relativity does not compel us to deny the ether. We may assume the existence of an ether; only we must give up ascribing a definite state of motion to it, i.e. we must by abstraction take from it the last mechanical characteristic which Lorentz had still left it. We shall see later that this point of view, the conceivability of which I shall at once endeavour to make more intelligible by a somewhat halting comparison, is justified by the results of the general theory of relativity.”[4] The fact that Einstein gives up ascribing a state of motion to the ether suggests that it may have no definite motion, since it does not have distance and is consequently devoid of Time, and vice versa. Despite this, the fact that he still goes on to explain the Theory of Relativity and the Special Theory using absolute motion demonstrates that it is a top view theory; therefore, though its inferences may be accurate, they will be accurate only to the top view model. It may therefore be concluded that Einstein’s model is in fact inaccurately theorised on Space and on a presumption based on distance. Let us examine this argument diagrammatically.

If, for now, to follow the teleological flow of this logic, we accept that Space and distance are not the same, this enables us to correct Einstein’s model by replacing Space with distance in the diagram. If distance and Space are not the same, then where are Space and the ether? Turning diagram A on its side reveals that the intersection X of distance and Time may still be inaccurate, since distance and Time only appear to intersect when viewed from the vantage point on which Einstein based his description of how the Universe functions, which can be described as the front or "top view". By contrast, when viewed from the side, Einstein’s fundamental model of “Space and Time” is found to be incomplete: the two may not in reality intersect; they only appear to do so from the top view.

The fundamental model on which modern physics is built depends on the intersection at X in diagram A. For example, in trying to determine how long it would take to travel to the moon, one might use distance and durational time (X). Chapman (2010) explains that “Every particle or object in the Universe is described by a "world line" that describes its position in time and space. If two or more world lines intersect, an event or occurrence takes place. The "distance" or "interval" between any two events can be accurately described by means of a combination of space and time, but not by either of these separately. The space-time of four dimensions (three for space and one for time) in which all events in the Universe occur is called the space-time continuum.”[5] This represents what we saw earlier in the first diagram: Einstein’s model uses distance for Space. Einstein’s view that Space and Time, like demand and supply in economics, must intersect is a problem of faulty perception. This "intersection theory" is found to be untrue when observed from the side view, where Time and Space (distance being Space in his model) do not in fact intersect. Consequently, the world line, if improperly applied, can be a fundamental misinterpretation, based on perception, of how laws in physics function, as what is observed is not always what occurs. The workings of Einstein’s space-time continuum, and some of the inferences made based on it, may be no more than a mirage when observed outside the paradigm that is the top view. As we have shown, there may be no actual or fixed intersection between distance and time. The potential non-existence of this connection could reduce the value of distance to 0, or make it a non-existent aspect of the continuum. Space separates, calibrates and predetermines Einstein’s notion of Space (distance) and Time. This can entail, for instance, that there is in fact no distance between the moon and the earth.
If there is no distance, as a result of the earth and the moon occupying the same Space, then it can be concluded that Einstein’s model also incorrectly labels Time. There is no “durational time” required to cover the distance, since in actuality the distance is 0 (the earth and moon occupy the same Space); therefore Time is 0, making the progression of Time in the “Space-Time” continuum inaccurate, or simply an illusion created by the model’s top view interpretation of the Universe observed in diagram A. The concept that time does not exist will take a while for the scientific establishment to digest; however, there are ever-increasing signs that this property may eventually be understood. Folger (2007) reveals, “Efforts to understand time below the Planck scale have led to an exceedingly strange juncture in physics. The problem, in brief, is that time may not exist at the most fundamental level of physical reality. If so, then what is time? And why is it so obviously and tyrannically omnipresent in our own experience? “The meaning of time has become terribly problematic in contemporary physics,” says Simon Saunders, a philosopher of physics at the University of Oxford.”[6]
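
Chapman's description, quoted above, of the "interval" between two events as a combination of space and time, "but not by either of these separately", corresponds to the standard invariant interval of special relativity. The sketch below is textbook special relativity, not the corrected model proposed here; the function names are hypothetical, and the (+, -, -, -) sign convention is an assumption (some texts use the opposite sign). It shows that a Lorentz boost changes the time and distance separations individually while leaving their combination unchanged.

```python
import math

C = 299_792_458.0  # speed of light in m/s (exact by SI definition)


def interval_squared(dt: float, dx: float, dy: float = 0.0, dz: float = 0.0) -> float:
    """Squared spacetime interval s^2 = (c*dt)^2 - dx^2 - dy^2 - dz^2, signature (+,-,-,-)."""
    return (C * dt) ** 2 - dx ** 2 - dy ** 2 - dz ** 2


def boost_x(dt: float, dx: float, v: float) -> tuple[float, float]:
    """Lorentz boost along x with velocity v (|v| < c); returns (dt', dx')."""
    gamma = 1.0 / math.sqrt(1.0 - (v / C) ** 2)
    return gamma * (dt - v * dx / C ** 2), gamma * (dx - v * dt)


# Two events separated by 2 s and 3e8 m along x, re-described from a frame moving at 0.6c.
dt, dx = 2.0, 3.0e8
dt2, dx2 = boost_x(dt, dx, 0.6 * C)
print(dt, dx, interval_squared(dt, dx))      # separations in the original frame
print(dt2, dx2, interval_squared(dt2, dx2))  # different separations, same interval
```

Here `dt` and `dx` both change under the boost, but `interval_squared` agrees in both frames up to floating-point rounding.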

What is labelled as “Time” in Einstein’s model is in fact a form of chronological decay, or motion, observed in matter and taking place in the absence of Time, as validated by the fact that in reality the intersection X is governed by the separation Y. Economists may make the same fundamental mistake when they presume that the intersection of demand and supply creates an equilibrium that generates economic growth. Like Einstein’s model, they mistakenly attribute economic growth (distance) to stability at the intersection X, when in fact stability and economic growth are not the same, just as distance and Space are technically not one and the same.
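
For readers less familiar with the economics side of the analogy, the intersection X of demand and supply is conventionally computed as the price at which quantity demanded equals quantity supplied. The following is a minimal sketch, assuming straight-line demand and supply curves with hypothetical coefficients; real markets are not linear. Note that the result is a single point: nothing in the calculation itself measures growth.

```python
def equilibrium(a: float, b: float, c: float, d: float) -> tuple[float, float]:
    """Intersection of linear demand Qd = a - b*P and supply Qs = c + d*P.

    Returns (price, quantity) at which Qd == Qs. Requires b + d != 0.
    """
    if b + d == 0:
        raise ValueError("demand and supply lines are parallel; no intersection")
    price = (a - c) / (b + d)
    quantity = a - b * price
    return price, quantity


# Hypothetical coefficients: Qd = 100 - 2P, Qs = 10 + 1P
p, q = equilibrium(100, 2, 10, 1)
print(p, q)  # 30.0 40.0
```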

The Mystery of Growth in Economics and Origin of Gravity in Physics

In the same way that physics has difficulty pinning down the source or origin of gravity, economics has difficulty identifying and understanding the origin of wealth and economic growth. If the answers to these problems were comprehensively known and understood, poverty would not exist and the ability to control and manipulate gravity would be a common aspect of human technological civilization. Both these problems may be perception related. The logical deduction that Time does not exist (Time = 0) does not confound mathematical models in physics. What it implies is that in a calculation where 10 seconds elapse, motion took place while time stood still. For example, for a speed of 100 meters per second the same calculations for “time” are used in the equation; analytically, however, time itself should not be considered to have elapsed. Ten seconds, for instance, becomes 10 cycles or motions; it is a conversion of the idea, not a loss of the idea itself. Similarly, the object travelled 100 meters; however, since in Einstein’s corrected model no distance is covered (distance = 0), something is covered, but analytically it is not distance. The conversion of this idea entails that it changes from 100 meters to 100 motions. A speed of 100 meters per second changes from a spatial concept to 100 motions per cycle (100 motions being equal to 1 cycle), which is a frequency based on the relativity of the movement of objects in relation to one another in the absence of Time and distance. This entails that clocks technically measure the absence, not the progression, of time. Even this is suspect, however, since from the side view the progression of Time would be considered a primitive human concept, the absence of time being a cornerstone of how the Universe functions.
The measurements in physics remain the same, but the fundamental properties with which they are associated change, creating a conceptual paradigm shift in how this phenomenon is understood: instead of seeing a limb as an independent force or object, we attempt to see how the limb is structured, what it consists of and what it is attached to. What we have just analysed is that what is experienced at the intersection X in economics or physics is relative. The distance between the earth and the moon, for example, is governed by laws of physics which in turn are rendered predictable by the properties created by the intersection X; however, the intersection at X is governed by properties of Y. This new model, devoid of durational time and distance, is more practical since it presumes the use of much less energy and fewer resources to create the experiential or top view Universe. Think of it this way: we do not need to expand a laptop’s screen to the extent of the heavens to study the stars. It would be impractical to do this, as it would use up vast resources; instead we compress the image to fit a 15-inch screen. The Universe may use the same approach. With the earth and the moon occupying the same space, and other properties being used to define the “distance” between objects, the Universe uses less “energy” or effort and operates more efficiently; distance can be maximized without sacrificing “space”. Time is discarded (remains zero or unchanging) to create a continuum that allows motion in matter (which is confused for durational time) to be extrapolated over it. The chronological progression of time as human beings understand it, even in its scientific context, is no different from markers covered over distance travelled. Both this kind of time and distance travelled are a wave form, that is, an illusion or a form of paramnesia required for the human mind to process its own reality.
Since there is no distance between objects, the idea proposed by the genius Isaac Newton, that gravity, weight or mass is created by action at a distance without a medium and by acceleration, may also be inaccurate. The apple striking Newton can in fact be interpreted as a front view description of the event, which is said to have led Newton to a deduction based on a perception formed from the effect of the falling apple. In the same way that there is no distance between the earth and the moon, there was no distance between the apple and Newton’s forehead; objects have no actual or substantive volume or mass and therefore no genuine weight. Mass and time are useful for the experiential Universe, but are not practical or efficient for the mechanics of how the Universe is created (that is, the operational Universe). It is not scientifically practical for matter or objects to be of excessive volume or weight, for primitive top view "Space" itself to span great “distance”, or for time to be of burdensome duration [i.e. there is no past being maintained as a physical reality waiting for a time traveler to come back to]. These will all inevitably be seen as very crude ways of understanding the Universe and the physics that applies to it. The same applies to time travel. The belief in the ability to go back in time is a classic example of what happens when Einstein refers to Time as Motion. When this mistake is made, it appears possible to physicists that a time machine can be built to take someone back in time; the maths and means to do this can be shown. However, this is misleading because Time is not Motion; that is, a clock ticking, even an atomic one, is not Time, it is Motion. Motion is Time in Einstein’s approach to understanding gravity, which is why science has not gained the ability to control gravity to this day.
However, I identify True Time (Tt) as being similar to absolute zero, where Einsteinian Time (Te) is absent. Te is in fact Motion, not True Time (Tt). Similarly, when Einstein refers to Space (Se, Einsteinian Space), it is not True Space (St) but Geodesics or Geometry (in general relativity, a non-Euclidean geometry), i.e., the Distance. True Space (St) is the 1s and 0s of information (code) from which Space is fundamentally created, as it may pertain to or be understood by quantum computing. Einstein’s model needs to be corrected to understand this problem; however, for the most part science today does not make this distinction and therefore makes mistakes in interpreting how physical forces work, even if the math appears to add up.
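
The conversion described earlier in this section (10 seconds to 10 cycles, meters to motions) claims that "the measurements in physics remain the same" while only their interpretation changes, which amounts to renaming units. The following is a minimal sketch, assuming the conversion is a pure one-to-one relabeling (second to cycle, meter to motion). The `Quantity` type, the `relabel` and `rate` helpers and the label names are hypothetical illustrations. The sketch does not model the parenthetical "100 motions being equal to 1 cycle", and it uses 1,000 m over 10 s so that the figures are consistent with a speed of 100 m/s.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Quantity:
    """A number with a unit label (hypothetical helper type for this sketch)."""
    value: float
    unit: str


def relabel(q: Quantity, mapping: dict[str, str]) -> Quantity:
    """Rename the unit without touching the numeric value (a one-to-one relabeling)."""
    return Quantity(q.value, mapping.get(q.unit, q.unit))


def rate(numerator: Quantity, denominator: Quantity) -> Quantity:
    """Divide two quantities and combine their unit labels."""
    return Quantity(numerator.value / denominator.value, f"{numerator.unit}/{denominator.unit}")


# Hypothetical mapping from conventional units to the labels proposed in the text.
MAPPING = {"m": "motions", "s": "cycles"}

distance = Quantity(1000.0, "m")  # 1,000 m covered in 10 s gives 100 m/s
duration = Quantity(10.0, "s")

conventional = rate(distance, duration)
relabelled = rate(relabel(distance, MAPPING), relabel(duration, MAPPING))
print(conventional)  # Quantity(value=100.0, unit='m/s')
print(relabelled)    # Quantity(value=100.0, unit='motions/cycles')
```

Because the relabeling is one-to-one, every numerical prediction is unchanged; only the names attached to the numbers differ.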

Let me try to explain more succinctly why it is practically impossible in physics to go back in Time as Einstein may postulate. To begin with, the human physical world takes place in the absence of time. This means the here and now, or present, is equivalent to Time = 0. Time being at absolute zero means there is no past and no future; these states fundamentally do not exist as a "place" you can travel to. The present is the only reality; therefore, travelling to the past or future involves leaving absolute zero, the absence of Time, or the present. However, once you step outside of Time (absolute zero), everything experienced is not real, in that it cannot be interacted with: it has no free will, and events cannot be changed or altered; it is just a record. This record can be compared to a hologram or, more accurately, to virtual reality (VR). It can be described as a record or recording of the past, preserved in Space-Time, that cannot be changed but that can be interacted with in the 1st, 2nd or 3rd person. These records, for the persons accessing them, are preserved in Space-Time and can therefore be described as a type of hologram or VR that is indistinguishable from reality or the real world. This is like walking through the light of a star. As you walk through the light toward the star you see its future; if you turn and walk away from the star, you see the light it shines ahead, that is, its past. You are therefore accessing natural, stored historic records. You cannot interact with any of these because they are not in absolute zero or True Time (Tt); that is, they are not in a condition that can be endowed with the present or absolute zero (Tt). To enter the present you have to exit the star's light and step onto the star itself, where there is no time. Only then does the here and now present itself and become interactive, in the sense that events can be changed.
When Einstein talks about Time (Te), it is not True Time (Tt or Tr), because it is Motion taking place under the mistaken understanding that Time elapses. This is simply not possible, because if time elapses as he believed, then what is being observed is not based on the present (Tr); it is therefore a measurement of a recording, something that is not real but an accurate rendition (record). Therefore, when you talk about going back in time or into the future, nothing that is done there can have any bearing on the present, because it is outside absolute zero. For instance, when you sit down to watch a movie or a television series for the first time, you do not know the outcome of decisions and choices made by actors on the screen. As what you are watching progresses, it would appear as though free will is active and the outcomes on the screen are unpredictable. However, what is being observed and experienced is a recording. The same applies to consciousness. What is lacking is the capacity to discern that what is being observed and experienced is a record. Time shift takes place such that the "captive" mind is incapable of discerning the limitations of its own consciousness. This captive state skews or affects modern scientific observations and knowledge about Time. For instance, Einstein does not make a distinction between Tr and Te; his assumption is that the past and the future are real places you can go to, that is, where you can experience zero time. In fact this is false because, like a movie recording on a VCR, this past and this future can only be changed in Tr or Tt, the true present. However, in the same way that a person can learn from their history and use it to alter their decisions in the present, they can see the future and alter their decisions in the present; but once an event occurs it becomes fixed and cannot be altered.
In other words, what happens in the past and what will take place in the future can both only be altered in the true present, where Time does not elapse. If a time machine steps outside of this (the true present, where Time does not elapse), anything the physicist encounters as the past or future is no different from a recording or hologram, because Motion (Te), or the movement of mass and matter, stops or becomes suspended (becomes a hologram or recording). For instance, going back in time will take a scientist to a record of the past. Since this record was generated using Time, it can be viewed as an accurate historic record preserved in Space-Time and accessible at locations during jumps. The record itself cannot be altered. If the scientist interacts with the record, or with that period in history, any of these interactions will generate virtual reality (VR). Technically, this will be no different from the scientist sitting down to watch a documentary on television, except with events being accurately recounted. The level of conscious immersion will be higher and can be determined by the scientist's level of self-awareness, such that it can be on par with VR.
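
The star-light comparison above rests on a standard fact: light from a distant object left it some time ago, so the object is seen as it was, not as it is now. The following is a short sketch of that conventional lookback delay, assuming propagation in vacuum and using the IAU value of the astronomical unit. The roughly 4.24 light-year distance to Proxima Centauri is an approximate figure added for illustration. The sketch computes only the ordinary light-travel delay, not the Tt/Te distinction drawn in the text.

```python
C = 299_792_458.0                   # speed of light, m/s
AU = 149_597_870_700.0              # astronomical unit, m (IAU 2012 definition)
SECONDS_PER_JULIAN_YEAR = 365.25 * 86_400
LIGHT_YEAR = C * SECONDS_PER_JULIAN_YEAR  # Julian light year, m


def light_travel_time(distance_m: float) -> float:
    """Seconds light takes to cover distance_m in vacuum, i.e. how far in the past the source is seen."""
    return distance_m / C


sun = light_travel_time(AU)
print(f"Sun: {sun:.1f} s (about {sun / 60:.1f} min ago)")

# Proxima Centauri is roughly 4.24 light years away (approximate figure).
proxima = light_travel_time(4.24 * LIGHT_YEAR)
print(f"Proxima Centauri: {proxima / SECONDS_PER_JULIAN_YEAR:.2f} years ago")
```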

Motion (Te) can only take place in the absence of Time (Tt). Technically, one of the few ways a person can possibly discern whether the world they experience is a fixed record (Te), rather than the true present (Tr or Tt), is by attempting to measure whether a time shift is in place, for example through the comparison of two clocks. If the two clocks do not keep identical time, then, however real the world they experience may seem, the indication will be that it is VR, or like a simulation, which they have not evolved the ability to distinguish from the true present (Tr). This deviation in time between clocks can be likened to the "totem" used by Leonardo DiCaprio's character in Inception. If the totem kept spinning, he knew it was still the dream state, i.e. Te; if the totem stopped spinning and fell, he was awake, i.e. it was the true present (Tr). Similarly, whether or not separate clocks keep the same time is possibly one of the few means by which humanity can detect whether it is in recorded VR (Te) or in real time (Tr).
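
The two-clock comparison proposed here is one physicists already perform. Clocks at different heights, or on the ground and aboard GPS satellites, are observed to tick at different rates, and standard general and special relativity attribute this to gravitational and velocity time dilation. The sketch below reproduces the usual back-of-the-envelope estimate under simplifying assumptions: a circular orbit of radius about 26,560 km, a spherical non-rotating Earth, and weak-field, low-speed approximations. Its figures therefore differ slightly from published values. It shows the size of the effect the text points to, not the Te/Tr interpretation of it, and the function names are hypothetical.

```python
C = 299_792_458.0           # speed of light, m/s
GM_EARTH = 3.986004418e14   # Earth's gravitational parameter, m^3/s^2
R_EARTH = 6.371e6           # mean Earth radius, m
R_GPS = 2.656e7             # approximate GPS orbital radius, m (assumed circular orbit)
G0 = 9.80665                # standard gravity, m/s^2
SECONDS_PER_DAY = 86_400


def tower_rate_difference(height_m: float) -> float:
    """Fractional amount by which a clock height_m higher runs fast (weak-field approx. g*h/c^2)."""
    return G0 * height_m / C ** 2


def gps_offsets_us_per_day() -> tuple[float, float, float]:
    """Approximate daily offsets (microseconds) of a GPS clock relative to a ground clock.

    Returns (gravitational gain, velocity loss, net gain). Ignores Earth's rotation,
    orbital eccentricity and oblateness, so the figures are only approximate.
    """
    gravitational = GM_EARTH / C ** 2 * (1 / R_EARTH - 1 / R_GPS)
    v_squared = GM_EARTH / R_GPS           # circular orbital speed squared
    kinematic = v_squared / (2 * C ** 2)   # special-relativistic slowing, low-speed approx.
    to_us = SECONDS_PER_DAY * 1e6
    return gravitational * to_us, kinematic * to_us, (gravitational - kinematic) * to_us


grav, kin, net = gps_offsets_us_per_day()
print(f"GPS gravitational: +{grav:.1f} us/day, velocity: -{kin:.1f} us/day, net: +{net:.1f} us/day")
print(f"A clock 100 m higher runs fast by a fraction of {tower_rate_difference(100):.2e}")
```

With these inputs the net offset comes out at roughly +38.5 microseconds per day. This is why GPS satellite clocks are deliberately adjusted before launch.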

At present, human beings experience reality at Se-Te. They do not have the capacity to tell that this is a hologram or recording of sorts, nor do they have the mental ability to discern that there is no free will in this state. This attests to the kind of power time shift may have over human consciousness. The only possible means of distinguishing these different states is for a person to experience a shift from Se-Te to St-Tt; only then may it be possible to tell that the previous state was not the present, much like waking from a dream. Proof of this separation of humanity from St-Tt can be sought by testing scientifically for the lack of simultaneity of distant events. The time shift of human conscious reality or existence from St-Tt to the confined and more limited Se-Te may be shocking for science to discover and to find empirical evidence for. Yet once again religion seems well ahead of science in its knowledge of this fact, where it states that humanity was banished from Eden into a safer, albeit harsher and less enviable, existence, the description of which fits a time shift from St-Tt to Se-Te.

Because time has a direct effect on how consciousness and perception are processed, this kind of potentially mind-bending dissociation, and the ability to determine states in time (what is real and what is not), is very likely to be one of the aspects of time-altering technologies that humanity will have to learn and be trained to navigate, much like seasickness when travelling on water or re-learning how to move in zero gravity. When the technology that allows jumps through Space-Time becomes available, it is very likely that vessels or passengers will be required to have specialized time dampeners to precisely control time-shift dissociation before this kind of travel can be used.

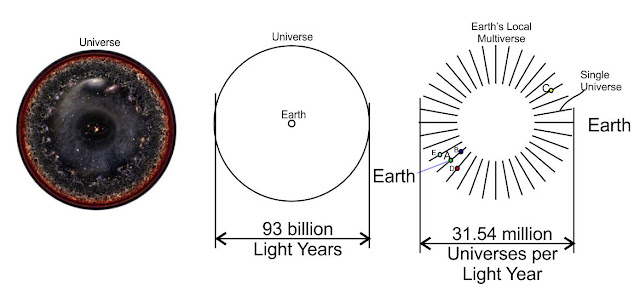

As you can see in the diagram above, there is no past and no future to "time travel" into. There is only the true Present, depicted by the blue circle. The blue circle represents the Side View, or True Time (Tt), where Time = 0, and True Space (St), or code/information/spirit. You exist in the Top View (Einstein's Space (Se) and Time (Te) at B, or the "backup"), where you experience the chronology of A in a kind of time-delayed safe zone in the past that appears to you as the present. The evidence that this is not the real present but a history of it lies in the fact that a clock at the bottom of a building and one at the top, or GPS clocks on earth and in space, show different times: proof that you are not at A but at B. What you believe is the future does not exist, because it is in fact the real or true present, that is, true Space-Time (St-Tt). Going back in time would instead create a jump to another part of the universe/multiverse (see Julia and Mandelbrot sets, or treat the blue and red circles as such). During the jump you would have access to, or see, a true record of the events or history of those locations in the orange circle of Distance-Motion, which is in fact Einstein's Space-Time (Se-Te). A jump into the future is not the "future" but an excursion or view toward the true present A. Should anything catastrophic happen to the universe at A, the fail-safe is that it can be recovered or "resurrected" at B to restore A, since anything lost at A is otherwise unrecoverable. This model of true Space-Time (St-Tt) and Einstein's Space-Time (Se-Te), which is in fact "Distance-Motion", where Distance refers to Geodesics or Geometry and Motion is Time, is sufficient to give a theoretical physicist a better framework for more accurately formulating and understanding the universe and the forces acting within it.

If a person understands that Tt is different from Te, he or she will know that a jump back in time will not cause time travel, but will instead create a jump from Te through True Space (St) into a new geographical location in Se, in the time-shifted present; see the section later on Julia sets and Mandelbrot sets. The universe uses the chronology humanity views as the past and future to divide existence into separate universes, creating a multiverse rather than wasting this energy preserving a past. Why? Efficiency: the universe will not waste energy and resources preserving records that can be stored with minimal resources as records, or soft memory, preserved in Space-Time, when it would rather use this Space-Time hardware to create a separate, functional, independent and inherently unique universe. Efficiency and prudent use of resources are key to how the universe functions. This is why, should a jump to the past or future be attempted, what is accessed during the jump is the VR or record (soft memory in Space-Time), while what emerges is a new physical location or "hardware" in the geography of Space-Time.
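
The text points to Julia and Mandelbrot sets without stating which of their properties is meant, and the later section it refers to is not reproduced here. For readers unfamiliar with them, both come from iterating z → z² + c. The Mandelbrot set collects the parameters c for which the orbit of 0 stays bounded. Each filled Julia set collects the starting points z0 that stay bounded for one fixed c. The sketch uses the standard escape-radius test with a finite, arbitrarily chosen iteration cap, so points very near a boundary may be misclassified. It illustrates only the mathematics, not the multiverse interpretation proposed here, and the function names are hypothetical.

```python
def escapes(z: complex, c: complex, max_iter: int = 200, radius: float = 2.0) -> bool:
    """Iterate z -> z*z + c; return True if |z| exceeds radius within max_iter steps."""
    for _ in range(max_iter):
        if abs(z) > radius:
            return True
        z = z * z + c
    return False


def in_mandelbrot(c: complex, max_iter: int = 200) -> bool:
    """Mandelbrot set: parameters c for which the orbit starting at z0 = 0 stays bounded."""
    return not escapes(0j, c, max_iter)


def in_filled_julia(z0: complex, c: complex, max_iter: int = 200) -> bool:
    """Filled Julia set for a fixed c: starting points z0 whose orbit stays bounded."""
    return not escapes(z0, c, max_iter)


print(in_mandelbrot(0j), in_mandelbrot(-1 + 0j), in_mandelbrot(1 + 0j))  # True True False
print(in_filled_julia(0.5 + 0j, 0j), in_filled_julia(1.5 + 0j, 0j))      # True False
```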

You are a simulation, physics can prove it - TEDxSalford, by astrophysicist, cosmologist and Nobel Prize winner Professor George Smoot

There is actually nothing strange or alarming about Professor Smoot's TEDx lecture, although I don't agree with every part of his talk. As physicists move toward a greater understanding of quantum mechanics and quantum computing, it becomes ever more practical to compare what the universe is made of with code or information, which is Space (St) as distinguished from Einstein's Geometric Space (Se). What is presumptuous and disorienting is humanity's belief that it created code, which is comparable to the once-held belief that the sun and the universe revolved around the earth. If inorganic substances such as metals are able to communicate with one another at the quantum level (using the "fields do not exist" approach), and as a result give rise to the movement of electrons (electricity) and to their own physical movement and mobility through this communication, for example magnets, magnetic particles and other forms of electromotive force (which light itself relies on), then it is not improbable that some of the earliest forms of life and intelligence could have been inorganic. These could very well have naturally evolved in complexity into superconductors, formed rare alloys, rare earth elements or compounds, growing like a biological organism that relies on a form of quantum bio-mechanics in place of the biological systems found in organic life. Being inorganic and capable of using ever more complex compounds and magnetic fields to move particles and minerals around, they could evolve in intelligence and complexity over billions of years, from the very inception of a universe, and through various types of fields could cover vast areas of Space.
Objects and materials that appear as bland inorganic structures need not be regarded as lifeless simply because they do not show the life signs expected of organic life. (This would be like finding the limb of an animal still kicking and, because the rest of the carcass cannot be found, assuming that the writhing limb is just an unintelligent, "non-living" thing or force in physics to which a law is ascribed, which is the attitude toward magnets.) The need for growth, like the biological need to feed, would lead to ever-increasing processing power in these inorganic materials and would naturally create or merge with a quantum realm alongside which, or from which, organic life emerged. For a scientist to say the universe in its inorganic complexity is not an intelligent living thing, in this context, seems rather naive and somewhat ignorant. Electromagnetic fields and electrical activity associated with the brain, cell and limbic activity are fundamentally more inorganic than they are organic, in the sense that this activity can take place without the need for an organic physiology. There is nothing strange, special or bizarre about this unless a person cannot think outside conventional approaches and cannot at least try to broaden the scope of reason. Many physicists have already moved on from traditional views that have been overtaken by the advances being made in an age where information technology is reshaping ideas and scientific concepts. Human beings tend to have a kind of arrogance that emerges from ignorance, regardless of education or the lack of it. For instance, a person can reach out, pick up a glass of water and drink from it; a magnet, on the other hand, can pick itself up and attach itself to another magnet or metal surface. Yet intelligence, life and purpose are ascribed to one action and not the other. This is a kind of arrogance.

Video: Neodymium Magnets and Super Ferromagnetic Putty

If a scientist does find a way to build a machine that jumps into the past, they would find that the time in the past they tried to jump into turns out instead to be a geographical location in the multiverse, one that uses the location in time to create a location in space (a portal or Star-Gate of sorts). If they persist in the pursuit of this technology, they are also likely to stumble upon how a new universe is created, i.e. a means of unleashing a catastrophic force, or of generating and controlling tremendous amounts of useful energy. It may also be important to note that the universe will not waste energy and resources creating duplicate universes (so-called parallel universes); it will instead use this resource to allow each universe existing in parallel to form and develop independently and uniquely, because variation and unique traits are naturally more valuable than endless duplicates. However, there may now and again arise two parallel universes that are binary or almost identical. It would probably be best to refer to these as binary or twin universes rather than parallel universes, because they will be rare; "parallel", as it is currently used, tends to imply that nearly identical universes are the norm, when in fact they are not. These natural records, stored in Space-Time, are likely to be considered an invaluable resource, since not only do they preserve history by time and location, they also offer a VR library of genuine, uncut life experiences that can be reviewed at will in 1st, 2nd or 3rd person, depending on how remote the audience wants to be from the objects, actors and events being watched or experienced. These records could have many uses, including verifying the truth, accuracy and quality of information, which would be useful in many industries and types of work.
It is probably worth noting that these VR records of history are likely to be highly detailed, being telescopic, macroscopic and microscopic. This entails that how the universe came to be, from inception to date, could be reviewed from an astronomical perspective as well as at the level of historic cellular and atomic activity, which would form an invaluable first-hand resource for scientific research, weighed against previous knowledge that relied heavily on assumptions. Whether these concepts can be realized can only be verified through the development of technologies capable of shifting time, which are currently beyond the reach of modern science.

The interesting aspect of this, though, is that if a physicist did find a way of building a time machine, they would simply need to review their math and theory for how the device operates and what it does, because in essence what they have created is a means of generating large amounts of energy, which can be very useful, and/or a means of teleportation between locations in the multiverse (without the need for a spacecraft), which may be a discovery just as significant as time travel. During the "time travel jump", which in fact turns out to be teleportation, it will indeed appear as though the traveler is going into the past, but the images the traveler observes are in fact records of the past between the two locations that exist as a kind of by-product of the jump (a recording), not the past itself. It is just like watching the terrain from a car window, except that what is observed is recorded history based on the locations being crossed or jumped through. These records are comprehensive (they include location, objects, thoughts and feelings, depending on the person in which they are accessed) and will be accessed or reviewed in 1st, 2nd or 3rd person in the space in between the jump from one geographical location in the multiverse to another. The universe keeps a time-stamped, accurate, location-based record of everything that has ever occurred in a given location since its inception, and physicists can learn to access this accurate history, rather than a biased retelling of history by individuals (the way DNA is used to gain more accurate information on events or crimes), by time travelling, which in this context means observing the past or future outside of the present (outside of Time=0).
For instance, if a physicist at a given location uses the time machine (which Einstein's physics shows can be built) to make a jump 24 hours back in time to the very same location, then he or she will stay in the same place and will not go back in time by 24 hours. They will instead be able to observe, during the jump, a record of everything that occurred at that location (where the jump took place) over the 24-hour period. This is no different from playing back a file, a CD or a videocassette, or watching streamed video online. A time machine is thus a device for viewing accurate records of history stored in Space-Time (e.g. a jump through time at the same location); depending on how it is operated, it is also a means of generating energy that can be used in industry or to power devices, and a means of teleportation. This technology would be useful in science for providing empirical evidence on the history of any location in the universe from inception to date, and useful in education for showing students first-hand what actually took place in history. It would also be useful for determining or reviewing legal cases, since the Space-Time historic records could be accessed for an audience to see what actually transpired in a case, in the same way the discovery of DNA exonerated innocent people who had been wrongfully incarcerated. If the design for creating a time machine (which physics shows is possible, especially when re-worked to account for the difference between Te and Tt) is followed, the outcome of the device would be what has been explained above.
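The playback idea above can be sketched as a simple data structure: an archive of time-stamped events filed by location, where a same-location "jump" of 24 hours is nothing more than a query for that location's records over the last 24 hours, returned in chronological order like a tape being played back. This is a toy illustration of the analogy, not a model of any physical device; the class name `SpaceTimeRecord`, its methods and the hour-based timestamps are all hypothetical.

```python
from __future__ import annotations

from bisect import bisect_left, bisect_right
from collections import defaultdict


class SpaceTimeRecord:
    """Hypothetical archive of events filed by location and time (in hours).
    Illustrates the playback analogy only; it models no real device."""

    def __init__(self) -> None:
        # location -> list of (timestamp, event), kept sorted by timestamp
        self._log: dict[str, list[tuple[float, str]]] = defaultdict(list)

    def record(self, location: str, t: float, event: str) -> None:
        entries = self._log[location]
        times = [ts for ts, _ in entries]
        entries.insert(bisect_right(times, t), (t, event))  # keep chronological order

    def jump_back(self, location: str, now: float, hours: float) -> list[str]:
        """Same-location 'jump': the traveler stays put and is shown the stored
        record of [now - hours, now] in chronological order, like a playback."""
        entries = self._log[location]
        times = [ts for ts, _ in entries]
        start = bisect_left(times, now - hours)
        end = bisect_right(times, now)
        return [event for _, event in entries[start:end]]


if __name__ == "__main__":
    archive = SpaceTimeRecord()
    archive.record("lab", 1.0, "door opens")
    archive.record("lab", 30.0, "experiment starts")
    archive.record("lab", 40.0, "experiment ends")
    archive.record("harbour", 35.0, "ship departs")
    # A 24-hour "jump" at hour 48 in the lab replays only the lab's last 24 hours.
    print(archive.jump_back("lab", now=48.0, hours=24))
```

The location acts as the key and time only selects a window of the stored record, which mirrors the point that the traveler stays in the same place and watches a playback.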

The reason why the historic records between locations appear to be a past that a time traveler can build a time machine to jump into is very simply that a scientist is led to believe, by Einstein, that Motion (ticking clocks) is Time, when it is in fact not True Time (Tt). A physicist therefore has to make this correction in his or her analysis to arrive at truly accurate or more informed descriptions of how the universe actually works: he or she must distinguish between Tt and Te, as well as between St and Se, to interpret observations and outcomes in physics accurately, as they are not one and the same. For instance, physicists like to say that measuring time with one clock at the bottom of a tall building and another at its top will yield different results. If so, then what is being measured is not the real world or present (T=0); what is in fact being measured is what the physicist would measure if they had already built the time machine, entered it and were observing the past or future, not the present. The "time travel" recording or hologram is evident in the time difference, or distortion, between the two clocks, which is not real; it is empirical evidence, or an example, of interacting with a recording or hologram (which is what the time machine would do with much greater depth, by going back to access records further back in history). However, because Einstein does not differentiate between True Time (Tt) and Motion (Te), physicists believe the time difference between the clocks is real, that is, taking place in the present, when they are in fact making an accurate measurement of a recording or hologram (records of history stored in Space-Time), which they mistake for the present because they are making the measurement close to, or believing it to be, T=0 (the real present).
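For a sense of scale, the clock variance referred to here can be worked out with the standard weak-field approximation used in conventional physics: a clock raised by a height h runs faster by a fraction of roughly gh/c². The sketch below assumes standard gravity (9.80665 m/s²) and ignores Earth's rotation and the change of g with height; the building heights are illustrative. For a 100 m building the fractional difference is about 1.09 × 10⁻¹⁴, which accumulates to just under one nanosecond per day.

```python
# Weak-field estimate of the rate difference between two clocks at different heights.
G_STANDARD = 9.80665          # standard gravity, m/s^2 (assumed constant with height)
C = 299_792_458.0             # speed of light, m/s
SECONDS_PER_DAY = 86_400


def fractional_rate_difference(height_m: float, g: float = G_STANDARD) -> float:
    """Approximate fraction by which the upper clock runs fast: g*h/c^2."""
    return g * height_m / C**2


def daily_offset_ns(height_m: float, g: float = G_STANDARD) -> float:
    """Accumulated difference between the two clocks after one day, in nanoseconds."""
    return fractional_rate_difference(height_m, g) * SECONDS_PER_DAY * 1e9


if __name__ == "__main__":
    for height in (1.0, 100.0, 400.0):  # illustrative building heights in metres
        print(f"{height:6.1f} m: {fractional_rate_difference(height):.3e} "
              f"({daily_offset_ns(height):.3f} ns per day)")
```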
The difference in time between the clocks is empirical evidence that time travel is possible, with the exception that it will yield true historic records of the past rather than move people back in time. If this were not true, the time shown by the two clocks would be exactly the same. In the present (T=0), the clock at the bottom of the building and the clock at the top have exactly the same time, and this unchanging, constant or universal timeline is the present (T=0), which is the same throughout the universe and across the multiverse. What does this mean exactly? If you, as a physicist, were in the present (T=0) when you measured time using clocks at the bottom and the top of the building, there should have been no time variance between them. The time variance is evidence that everything you are experiencing right now is a record of your past (Te): something the real you, who exists in the real present (T=0) and whom you mistakenly believe to be a future you, has already done. However, your human consciousness, which is actually in the past, believes it is doing and experiencing everything (life) in real time, for the first time, right now, on the incorrect assumption that this is the present. Let's say that CNN records the news live (Tt or T=0). It then broadcasts the news with a 6-hour time delay (Te). You, the viewer, are watching this broadcast and reacting to it believing it is live (your current belief about where you are in time at this very moment), when in fact it is a record of what took place 6 hours ago. Everything that you are doing right now, a future you who is actually in the real present has already done. However, your consciousness, being unaware of this time shift, is incorrectly processing a record of the past (everything you are seeing and doing right now) as the present. In other words, your understanding of your own place or existence in Space and Time is primitive because it is not aware of existing in this variance.
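The CNN analogy is, in software terms, a delay line: each event is recorded live into a buffer and handed to the viewer only once it is a fixed number of hours old. A minimal sketch, assuming an hourly feed and the 6-hour delay from the example; the function name `delayed_broadcast` is hypothetical.

```python
from __future__ import annotations

from collections import deque
from typing import Iterable, Iterator, Optional


def delayed_broadcast(live_events: Iterable[str],
                      delay_hours: int = 6) -> Iterator[tuple[int, Optional[str]]]:
    """Yield (hour, event_on_screen) for an hourly feed aired delay_hours late.

    The studio records each event live (Tt in the analogy); the viewer only ever
    receives an event once it is delay_hours old (Te in the analogy)."""
    buffer: deque[str] = deque()
    for hour, event in enumerate(live_events):
        buffer.append(event)                 # recorded live at this hour
        if len(buffer) > delay_hours:
            yield hour, buffer.popleft()     # aired now, but it happened delay_hours ago
        else:
            yield hour, None                 # nothing has aired yet


if __name__ == "__main__":
    feed = [f"event at hour {h}" for h in range(9)]
    for hour, on_screen in delayed_broadcast(feed):
        print(hour, on_screen)
```

At hour 6 the viewer sees hour 0's event and, having no other reference, has no way of telling from the screen alone that it is not live.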
This raises an important question, which is why?

Firstly, in essence it is the realization that you exist in Te as a version of yourself existing in the past, not the real you functioning in the future Tt, which is actually the real present. The answer to why the universe is structured in this way may be quite simple: human beings do the same. It is called risk management. To understand this process you have to differentiate between Einstein's Space, which is geodesic or geometric (Se), and True Space (St), which is created from code or information [or spirit, to add a religious perspective. Religion seems way ahead of science in this respect; it explains that there is more than one kind of death: a physical death (Te), from which a person can be spared, and a second death (Tt), which is a death of the spirit from which there is no recompense or recovery]. Should anything cataclysmic happen to the real you operating in Tt, this code, information or data and knowledge would be permanently lost beyond recovery. Not only would you die a physical death, you would also cease to exist. Therefore, the universe is cradling or protecting the real-time you (Tt) by running what it may consider just as important or more important, that is, a fail-safe, off-site backup of you (Te) that exists further back in time without being aware of this discrepancy [which is you right now]. Te can be used to restore or resurrect Tt in the event that a cataclysmic event destroys a valuable part of the universe. Viewed in this way, it makes perfect sense.

Secondly, the version of you, B (functioning at Te), which is actually a backup of the real you, A, functioning in real or True Time (Tt), is re-living, re-enacting and reacting to what has already occurred, in first person, as though it were spontaneous, freely willed and happening for the first time, when it is in fact reacting to a pre-existing record flowing through time. The scientific proof of this is observed by Einstein himself, in that in the present (Te, as experienced by B) simultaneity of separate events does not exist. Why is it important for you, B, to experience Te as though it were taking place in real time? Once again, the answer may be very simple: the universe does this for risk management purposes. Technically, although B believes it has free will, it in fact has none; yet the fact that it relives the record believing it has free will and is responsible for outcomes allows it to form an unbiased second opinion of events that have already occurred. A becomes immediately aware of this second opinion in real time (Tt) because B is just an earlier, time-shifted A. If B relives the record in Te and forms a different opinion, A will spontaneously become aware of it and adjust its behaviour in real or True Time (Tt). This adjustment in behaviour will lead to a new record moving down the timeline, which will once again be reviewed by B, unbiased because it is unaware of A, and A will immediately be aware of B's opinion. In this bending of time, the perpetual loop that is created (which behaves like RAM) may give rise to what scientists today refer to as self-awareness and a conscience. The memory and the improved ability to make decisions become intelligence.
Human intelligence, or intelligence in general, can in this case be described as being created by manipulating time through Te and Tt, as a risk management process designed to create self-awareness and improve intelligence through the interaction of A and B, which are one and the same person or organism functioning in different time settings, so that decisions increasingly have better outcomes. These improved outcomes are what accumulate as the record, which is an accessible history preserved in Space-Time (ROM). This seems to be a method for forcibly jump-starting intelligence. What is interesting about this process is the manner in which time is manipulated into behaving like a natural transistor or processor in which only the present exists: by making the system treat the present (Tt) as the future and the data being backed up as the past, it naturally creates a pseudo-present (Te) that is in fact the past, and it uses the record of events as history; in combination these create Past-Present-Future. The delay between A and B is likely to be due to the time it takes to capture, back up and archive A at B, a process that must have a maximum processing speed and involve vast amounts of data. This natural method of structuring and organizing time and information would be remarkably useful. This analysis may make some people uncomfortable; however, where a hypothesis is formed, every stone needs to be turned to gain greater clarity on the subject.
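The A/B loop described above has the shape of a delayed feedback loop. The toy sketch below assumes that A's behaviour can be reduced to a single number, that B reviews the record from a fixed number of steps earlier and returns its "second opinion" as the gap between that record and a target, and that A adjusts immediately by a fraction of that opinion. The function `ab_feedback`, the target, the delay and the gain are hypothetical choices made for illustration, not values derived from the text.

```python
from __future__ import annotations


def ab_feedback(target: float, steps: int = 200, delay: int = 2,
                gain: float = 0.2) -> list[float]:
    """Toy delayed-feedback loop between A (acting now) and B (reviewing an old record).

    history[-1] is A's current behaviour; B only ever sees history[-1 - delay]."""
    history = [0.0] * (delay + 1)          # A's starting behaviour, padded so B has a record
    for _ in range(steps):
        reviewed = history[-1 - delay]     # B relives a record from `delay` steps ago
        opinion = target - reviewed        # B's second opinion: how far off that record was
        history.append(history[-1] + gain * opinion)  # A adjusts immediately
    return history


if __name__ == "__main__":
    trace = ab_feedback(target=1.0)
    print([round(x, 3) for x in trace[:8]], "...", round(trace[-1], 6))
```

With a modest gain the behaviour settles on the target even though B always reviews an old record; if the gain is set too high for a given delay, the loop overshoots and oscillates instead, which is one way of seeing why the length of the delay between A and B would matter.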

Of course, this same property will apply to gravity. All of the universe's mass, forces and distances that seem tremendous seem so only because they are seen from the top view. In reality they are in turn controlled by underlying properties yet to be discovered in physics, occupy just one tiny dimension and are a very small part of something much greater, namely the underlying code by which they are written and operate. Later in this paper an attempt will be made to explain why there may be a scientific basis for this in theoretical physics. In this regard, the “increase” in weight or g-force observed when an object is accelerated may in fact not be created by velocity, since distance = 0 and consequently velocity remains zero; this idea of g-forces would only be a top-level “illusion” that is both quantifiable and measurable from the top view, but that is easiest to manipulate from the side view. Economics is gripped by a similar illusion. It believes fundamentally in scarcity and the finiteness of economic resources, when in fact the volume of economic resources is determined not by what is observed or what appears available, but by the underlying operating system by which those resources are made available.

The Measurement Problem Explained: A Refresh Rate for Matter & Analytics in Economics

Economics faces the same human constraints in logic that are found in physics, in that what is observed and interpreted analytically may not be what occurs. For instance, it is possible to theorize a scientific basis for the non-existence of time; an attempt shall be made here to explain this view. To make the explanation simpler, let us begin with a very simple example: a cartoonist or animator drawing a car can flip pages of drawings to show a car travelling at 100 km/h; in reality, however, the image on each page is standing still and has no velocity. Similarly, to begin to understand forces like gravity, scientists may have to learn to accept the non-existence of durational time and rationalize this in physics.
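The flip-book example can be made concrete. In the sketch below each page is an immutable record holding only the car's position on that page, so no page carries a velocity; a speed of 100 km/h emerges only when successive pages are compared at a given flip rate. The 24 pages per second, the 2-second length and the names `Page`, `draw_flip_book` and `apparent_speed_kmh` are illustrative assumptions.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class Page:
    """One still drawing: the car's position on this page, and nothing else.
    frozen=True mirrors the point that a drawn page never changes."""
    number: int
    car_position_m: float


def draw_flip_book(speed_kmh: float, pages_per_second: int, seconds: float) -> list[Page]:
    """The animator shifts the car by a fixed amount on each successive page."""
    shift_per_page_m = speed_kmh * 1000 / 3600 / pages_per_second
    count = int(pages_per_second * seconds) + 1
    return [Page(n, n * shift_per_page_m) for n in range(count)]


def apparent_speed_kmh(pages: list[Page], pages_per_second: int) -> float:
    """Speed exists only when pages are compared in sequence at a flip rate."""
    distance_m = pages[-1].car_position_m - pages[0].car_position_m
    elapsed_s = (len(pages) - 1) / pages_per_second
    return distance_m / elapsed_s * 3600 / 1000


if __name__ == "__main__":
    book = draw_flip_book(speed_kmh=100, pages_per_second=24, seconds=2)
    print(len(book), "still pages")
    print(round(apparent_speed_kmh(book, 24), 6), "km/h when flipped")
```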

Though the car in the video below is moving at 100 km/h, from the time it started off until it reached its destination it was visible in the same frame, and a frame is no different from an entire universe. Since the starting point and the destination are in the same frame, in reality there was no distance between them, neither at the smallest scale, the quantum level, nor at the greatest, the astronomical level. If there is distance, there is matter to quantify distance, much as a ruler is scaled to measure; and if there is distance and matter, then time must exist to quantify rates of motion. If motion exists, then it represents time and Relativity Theory applies: all this leads to a Distance-Time or Matter-Time [Time being Motion], or top view, of the universe, not a Space-Time view of the universe, which is where Einstein errs. If there is no distance, then there is no motion and no durational time, and matter exists but not as it is understood in the top view; since all matter exists in a single point when observed from the side view, that single point is a single frame, or the entire universe. At this stage physics begins to move beyond observable phenomena, from existing as matter to existing as information or a type of code.

When this underlying code and how it works is understood, humanity will cross a new threshold in physics and be able to manipulate gravity with the greatest of ease. The image on each page standing still entails that it has no velocity, and yet the object, when observed on refreshed pages, appears to move. This condition creates what scientists call the “measurement problem”. To date physics has failed to explain the measurement problem. As I have suggested here, if matter indeed refreshes, then this very elegantly and comprehensively explains how it can appear to be a particle and a wave at the same time, essentially providing a pragmatic end to this debate in physics. This very simple explanation does not need the concept of a "superposition" of two states when matter is not being measured; there is no need to attribute an effect on the particle to active observation or measurement, or to a state of non-observation, which is quite weird, or to suppose that only conscious beings affect or are affected by this process. If matter refreshes, this problem is solved. If you don't know what the measurement problem is, you can watch this video.

When the frames used to animate an object are examined more closely, it will inevitably be found that the object on each frame is standing still, yet when the flipped pages are observed less closely or at a distance, the object is animated. This phenomenon also represents the problem experienced in quantum physics, where matter appears to be able to exist as a particle or a wave. Since we know that fundamentally the image on each page or frame is standing still, we are able to infer that the movement we observe in science as a “wave form” or velocity, or on the screen as motion, is in fact an illusion, a form of paramnesia or a kind of “trick” of nature. It is a scientific phenomenon which creates empirically verified wave properties, yet without this “illusion” [Einstein’s interpretation of a “top view” of the Universe] matter as human beings understand it, and reality as they experience it, would not exist. When the “pages are being flipped” and motion appears to take place through an animated object, matter seems to behave as a wave; however, when “moving” matter is examined more closely, on each page it has no velocity, is in fact standing still and therefore appears as a particle, which explains the inevitable dilemma of how an object can be moving and standing still at the same time.

Wave-particle duality in physics may in fact be a flawed concept, since waves as they are conventionally understood do not exist; they are more likely the top-view illusion described earlier as a kind of paramnesia, attributed to observation at the top level and induced by motion, which is facilitated by the refresh rate of the universe and therefore the refresh rate of matter. This illusion is what is referred to as the experiential Universe, the aspect of the Universe people inhabit on a daily basis. It is brought to life by matter being refreshed, which endows it with mobility and free will: since motion is time in Einstein's model, the refresh rate is the origin of both motion and top-view time. The experiential Universe is not an illusion per se; it is a real, flesh-and-blood world or existence, since it has its origins in the particle form. However, the fundamental properties upon which it is created rely on the wave form of matter, which is technically produced by ephemeral or impermanent processes. In other words, even though reality is rooted in the particle form of matter, a particle itself is impermanent. This impermanence can be better understood by appreciating that matter refreshes constantly; in other words, particles must persistently appear and disappear in order for matter to appear capable of animation or motion. Consequently, it can be deduced that, to have the ability to move, matter, or the particle from which matter is constructed, must have a third property that is currently unaccounted for in modern physics: the ability to “refresh”. To “refresh” refers to the ability to disappear and reappear, a property of matter currently unaccounted for in modern science, but which inferences suggest may occur. At this stage we have leaped past Einstein's model of the universe. We are able to begin to understand how we live in a universe without conventional distance, and therefore without conventional Time.
This makes it easy to understand how and why the phenomenon of both quantum tunneling and quantum entanglement occur.

When we see an object moving, no matter how fast, it is in fact never in motion. If the image is closely observed, like a single frame on a movie projector's reel, it is in fact completely still or frozen in place. In order to appear to move, each frame must be successively removed and replaced by another. The refresh rate of matter entails that matter exists and ceases to exist so rapidly that it is difficult to say which state it occupies, leading to a paradox such as that observed in the Schrödinger’s Cat thought experiment: it is dead and alive, standing still and moving, existing and ceasing to exist, all of which appear to defy conventional thinking in physics. However, using the flow of logic thus far, we can dismiss the top-view concept that a particle is a wave, since we know distance is a construction designed specifically for perception, or put simply, it is experiential. We can conclude that matter always is, and fundamentally remains, a particle, which is why, when observed more directly and scrutinized closely, matter will tend to appear as a particle, while its wave properties are induced by the process of refreshing these static particles or “stills”. Wiki (2010) explains that “Wave–particle duality postulates that all matter exhibits both wave and particle properties. A central concept of quantum mechanics, this duality addresses the inability of classical concepts like "particle" and "wave" to fully describe the behaviour of quantum-scale objects. Standard interpretations of quantum mechanics explain this ostensible paradox as a fundamental property of the Universe, while alternative interpretations explain the duality as an emergent, second-order consequence of various limitations of the observer.
This treatment focuses on explaining the behaviour from the perspective of the widely used Copenhagen interpretation, in which wave–particle duality is one aspect of the concept of complementarity, that a phenomenon can be viewed in one way or in another, but not both simultaneously.”[7] Clearly, as we may note here, there is no duality; a particle is never really a wave in the same way the stills on frames of a projector are never actually moving, they always remain static on each frame and consequently remain in “particle” form therefore the Copenhagen interpretation may be a little misleading.

With physics, on the other hand, the mistakes in fundamental theory feed on one another and therefore emerge one after the other like whack-a-mole: no sooner is a solution found for one than another raises its head.

Einstein and Misconceptions about the Speed of Light

It is my belief that we are entering an era of science in which the public will no longer be able to take seriously a highly qualified physicist from a reputable institution who believes the speed of light is both an invariable constant and an unsurpassable limit. Such physicists must now be viewed as relics being eclipsed by the evolution of the paradigm that defines the very foundations of knowledge in a subject area in which they were once experts; they must begin to evolve and advance to remain of any relevance to mankind's future.

Dualities in physics are often symptoms of flawed models that do not concisely explain phenomena; they therefore allow contradictory statements or theories ascribed to "relativity" to co-exist, and equations are tailored to suit these weird juxtapositions. The measurement problem is one of these, but we have used a refresh rate to concisely explain how a wave and a particle co-exist. A refresh rate can also be used to explain the relationship between matter (-) and anti-matter (+) and how the two states co-exist by refreshing from one state to the other (i.e. the same matter alternates between electron and positron states). Matter and anti-matter are very likely to be the same matter alternating between negative and positive charges; it is either in one state or the other, which explains why it does not explode spontaneously. If alternation between negative and positive charges occurs in this way during each refresh, it explains why anti-matter can be present but unseen or intangible, making matter appear dominant. This switching back and forth may be fundamental to how matter exists.
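As a toy illustration of the alternation hypothesis (not a physical model), the sketch below has a single particle flip between a negative and a positive charge on every refresh, so it is only ever in one state at a time. An observer whose sampling happens to be locked to every second refresh sees only one of the two states, which mirrors the suggestion that anti-matter could be present yet unseen. The refresh stream, the sampling period, the phase and the function names are all hypothetical.

```python
from __future__ import annotations

from itertools import islice
from typing import Iterator


def refreshed_charge() -> Iterator[int]:
    """Hypothetical refresh stream: the same particle is -1 on one refresh and
    +1 on the next, never both at once."""
    charge = -1
    while True:
        yield charge
        charge = -charge


def observe(samples: int, every: int, phase: int = 0) -> list[int]:
    """Charges seen by an observer who looks only at every `every`-th refresh,
    starting from refresh number `phase`."""
    return list(islice(refreshed_charge(), phase, phase + samples * every, every))


if __name__ == "__main__":
    print("every refresh:      ", observe(6, every=1))
    print("every 2nd, phase 0: ", observe(6, every=2))
    print("every 2nd, phase 1: ", observe(6, every=2, phase=1))
```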

This flip book animation demonstrates how matter on every page is standing still [is a particle], that is, as in the Bose-Einstein Condensate. However, when the artist begins to flip the pages [refresh matter], or the condensate heats back up, the car miraculously begins to move [it becomes a wave] and appears as sodium, or the sodium atom, effectively solving the measurement problem.

If Einstein explains the Bose-Einstein Condensate as matter in its fundamental wave form, this is misleading. The problem with professors continuing to teach students of physics that the fundamental state of matter is a wave, not a particle, is that this misinterpretation makes it impossible to explain gravity, because the particle, rather than the wave function or form, is the conduit for mass or gravity. As we explain later, this mass is not contained in the particle itself but consists of true Space acting on the particle. It is also important to remember that for physicists to see and be able to understand a "particle" is a top-view or Einsteinian description of matter. We know that from the side view there is no particle. There is only some form of side-view code, and when the code is observed from the top view it appears as, and behaves like, a physical particle. Understanding this procedure explains why and how a wave cannot be more fundamental than a particle or object. Technically, this means physical matter does not exist as is commonly thought (from the top view), and all the physical or sensory reality associated with matter (even mass-energy equivalence in nuclear energy) is created by the interaction of electromagnetism and gravity, rather than by the matter itself, which consists of code. In other words, mass, texture and all the "physical" attributes associated with matter do not come from matter itself but are simply "attributes" added to the code, contextually, by the interaction of gravity (refresh rate) and electromagnetism, without which matter could not be interacted with, i.e. it would be incapable of being physical (solid), nor could it have mass; even something as simple as picking up a coffee mug would be impossible because the mug would have no physical attributes or properties. However, if magnetic fields can in turn act on the particle, it then becomes possible to manipulate gravity indirectly using the plasma's mass.

That the fundamental state of matter is a particle, not a wave, was empirically proven by the Bose-Einstein Condensate achieved using lasers to cool sodium atoms down to 177 nanokelvin. The result is a plasma that, on observation, is easy for scientists to mistake for a wave form. It is in fact atoms being observed as a fine cloud of fundamental particles, or a particle soup, in basically the structural pattern, thumbprint or blueprint of sodium. (It appears to align only because the particles appear uniform, and it cascades only because the particles are aligned under the influence of a magnetic field.) Hypothetically, interfering with this field would alter its "field pattern" or blueprint, causing the plasma to change from sodium into some other substance when it condenses (heats back up). Technically, using lasers to melt substances by freezing them to 177 nanokelvin and below should allow scientists to create any substance or form of matter by altering the pattern of the plasma while it is still in its melted state and letting it condense into some other form of matter (performing what would be considered the equivalent of the Philosopher's Stone in alchemy).

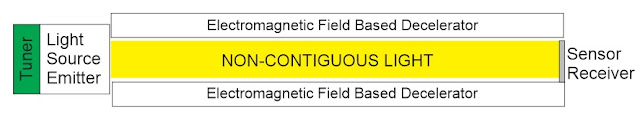

If all matter is in fact created from light, as a fundamental particle infinitesimally smaller than an electron that can be observed in the Bose-Einstein condensate, then it may be necessary to revisit the structure and nature of light itself. If magnetic fields are dismissed using a "fields do not exist" approach, it may be necessary to consider that light is made up of a uniform particulate that propagates through transistors (Space or St), not through fields (Se or Geometric Space) as observed by the naked eye and experienced. If light is controlled by transistors rather than electromagnetic fields, it is inherently non-contiguous and does not move from one place to another, but uses a stimulation process that only makes it appear to travel. Light can behave as both a wave and a particle. However, we have seen that light waves can exist without the need for magnetic fields by being non-contiguous and controlled instead through entanglement, made to produce "light waves" moving at 299,792,458 m/s when in fact light does not travel this distance or move at all (see the animation below). By generating light waves, these transistors are not only able to make light appear to travel, but also generate depth, width and motion (where motion is mistaken for Time in Se-Te). For this process to be understood, it must be accepted that light in general consists of a fine uniform particulate, as observed in the BE condensate. This particulate is turned into waves by a process similar to the way electrons are used in semiconductors to process information.
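The idea of light that "appears to travel" without moving resembles a chaser or marquee light: a row of lamps fixed in place, switched on one after another. In the sketch below nothing in the row ever moves, yet printed in sequence the lit spot advances, and its apparent speed is simply the lamp spacing multiplied by the switching rate. The row length, spacing, rate and function names are illustrative assumptions; with 1 m spacing, a rate of 299,792,458 switches per second would give the apparent speed quoted above.

```python
from __future__ import annotations


def chaser(lamps: int, ticks: int) -> list[str]:
    """Return one text row per tick for a row of stationary lamps.
    Exactly one lamp is switched on per tick; no lamp ever changes position."""
    rows = []
    for tick in range(ticks):
        lit = tick % lamps  # index of the stationary lamp that is on at this tick
        rows.append("".join("*" if i == lit else "." for i in range(lamps)))
    return rows


def apparent_speed(lamp_spacing_m: float, switches_per_second: float) -> float:
    """Speed at which the lit spot seems to travel: spacing times switching rate."""
    return lamp_spacing_m * switches_per_second


if __name__ == "__main__":
    for row in chaser(lamps=8, ticks=10):
        print(row)
    print(apparent_speed(1.0, 299_792_458), "m/s apparent")
```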

Furthermore, this plasma state and the ability to influence it using magnetic fields to create a particle beam possibly offers one of the few plausible methods for using electromagnetism to indirectly control gravity by manipulating the plasma. This works because suspending motion in this manner is an artificial way of slowing down time [motion] (another safer route to the Hutchinson effect), which gives physicists access to the manipulation of the mass of the plasma through electromagnetic fields.

This aspect of physics has not been pursued, and the technology has made no progress thus far, because, once again, the observation has been misinterpreted, in this case by calling what is observed in the behaviour of the cloud or plasma "waves" instead of fundamental particles. In other words, the method and technology for controlling gravity and potentially building any substance using fundamental particles as building blocks in manipulated fields have already been developed. The challenge with this type of technology, of course, will always be the method used to suspend motion, which requires extremely low temperatures.

Britannica describes Bose-Einstein condensate (BEC) as "a state of matter in which separate atoms or subatomic particles, cooled to near absolute zero (0 K, − 273.15 °C, or − 459.67 °F; K = kelvin), coalesce into a single quantum mechanical entity—that is, one that can be described by a wave function—on a near-macroscopic scale." Firstly, it is not a single quantum entity; it consists of a cloud of fundamental particles from which the atom is built. Secondly, the wave function is created by a magnetic field. It is not evidence of wave-particle duality; in fact it proves the opposite, which is that fundamentally matter is a particle, not a wave. In other words, in the state of matter when the projector's wheel stops spinning (when time stops), the object in each frame is a particle, as shown in the image of Bose-Einstein condensate captured above. Physicists to this day are still getting this wrong. In all likelihood, if temperatures fell further, the electromagnetic field itself would collapse, leaving only the plasma without a wave function or pattern that forms the blueprint for sodium.
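The temperatures in the Britannica definition and the 177 nanokelvin figure for the sodium condensate can be compared with a short unit conversion. This is a minimal sketch using the standard scale offsets (0 K = −273.15 °C = −459.67 °F). The function and constant names are hypothetical, chosen only for illustration.

```python
# Standard temperature-scale conversions. Function and constant names are
# illustrative only.

def kelvin_to_celsius(kelvin: float) -> float:
    """Convert kelvin to degrees Celsius (0 K = -273.15 °C)."""
    return kelvin - 273.15


def kelvin_to_fahrenheit(kelvin: float) -> float:
    """Convert kelvin to degrees Fahrenheit (0 K = -459.67 °F)."""
    return kelvin * 9 / 5 - 459.67


BEC_TEMPERATURE_K = 177e-9  # 177 nanokelvin, the figure quoted in the text

if __name__ == "__main__":
    print(f"{BEC_TEMPERATURE_K:.3e} K")
    print(f"{kelvin_to_celsius(BEC_TEMPERATURE_K):.9f} °C")
    print(f"{kelvin_to_fahrenheit(BEC_TEMPERATURE_K):.9f} °F")
```

Printed to nine decimal places, the Celsius and Fahrenheit values differ from absolute zero only in the seventh decimal place. This is why such temperatures are normally quoted in nanokelvin.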

When viewed normally in Se-Te (Top View), the atoms appear as physical objects. However, when the temperature is sufficiently lowered toward absolute zero using lasers, the view changes to St-Tt (Side View) and the particles that make up the atoms become visible. They are uniform. Each particle has mass and is entangled with other particles in the cloud. Though the cloud appears as a single indivisible substance, it should not be mistaken for one, as it consists of fine particles. Entanglement furnishes each particle with information on how it should behave or move, and this makes energy and matter programmable. The entangled particles moving in harmony create the particle cloud or Bose-Einstein condensate. The code, algorithms and other information fed to the particles through entanglement, which determine how they behave, are what determine the substance they become. The manner in which the particles move in unison makes them appear as though they are a wave or under the influence of a magnetic field. Any fields should be considered a by-product of quantum entanglement. Quantum mechanics programs the particles and is responsible for communication and for harmonizing the fine particle cloud or Bose-Einstein condensate. Fields are not the origin or source of harmonics. The fundamental state of matter is an individual particle, not a wave. Although waves and fields are useful for explaining what is being observed, it may be more accurate to state that the waves are created not by fields but by quantum mechanics. Each uniform particle, at this stage of analysis, can be considered part of the process by which a bit of information is created and processed.

The image above is the more accurate emulation of an atom. The element can be referred to as a "Tron". For simplicity, the diagram shows only one Tron in orbit. Though there is only one Tron in the diagram, it remains plausible that there can be a number of Trons and orbits in an atom, each dipping into and out of the nucleus at the same T3 neutron nexus. There can be Trons in the same and different orbits moving as electrons while others move as protons in the nucleus, and they exchange places as they travel into and out of the nucleus, switching polarity as they do so. When the Tron is at T1 it is described as having a negative charge and is therefore called an "elec-tron". When its orbit reaches T3 it reverses polarity. During reversal, or the process of switching polarity, it has no charge and may be referred to as a "neu-tron". When it is at T2 it has reversed polarity and is described as being a "posi-tron". It cycles back into orbit to T1, repeating the process. It moves under its own internal power, directed by quantum mechanics.
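The T1, T3, T2 cycle described above can be written out as a small repeating state sequence. This is a minimal sketch that encodes only the positions, labels and charge signs as this text proposes them. These are the document's own assignments, not standard particle-physics ones. The names `TRON_CYCLE` and `trace` are hypothetical, chosen for illustration.

```python
from itertools import cycle, islice

# Hypothetical encoding of the Tron cycle as proposed in this text:
# (position, label used in the text, charge sign). The labels follow the
# document's model, not standard particle physics.
TRON_CYCLE = [
    ("T1", "elec-tron", -1),  # external orbit: negative charge
    ("T3", "neu-tron", 0),    # nexus where polarity reverses: no charge
    ("T2", "posi-tron", +1),  # polarity reversed: positive charge
]


def trace(steps: int) -> list[tuple[str, str, int]]:
    """Return the first `steps` states of the repeating T1 -> T3 -> T2 cycle."""
    return list(islice(cycle(TRON_CYCLE), steps))


if __name__ == "__main__":
    for position, label, charge in trace(6):
        print(f"{position}: {label:<10} charge {charge:+d}")
```

Using `itertools.cycle` keeps the sequence repeating indefinitely. This mirrors the statement that the Tron cycles back to T1 and repeats the process.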

MRI scan of an atom. The dimple in the atom, which can vary in depth and width, corresponds with "Trons" dipping into and out of T1-T3-T2 orbits. The scan is three-dimensional, and it fits what is predicted by T1-T3-T2; see Magnetic resonance imaging of single atoms on a surface.

When it comes to quantum mechanics, the purpose of energy bands becomes clearer using the new construct. The diagram above shows that the orbital energy bands are designed to repel any Trons in orbit. Electrons that are negatively charged will be compelled to exit a negatively charged energy band in external orbit at C. When in external orbit they will therefore be repelled until they find the nearest exit, which is the positively charged energy band or orbit in the nucleus. However, when Trons enter the nucleus they become positively charged. A consequence of this is that the positively charged energy band in the nucleus pushes them away, and they once again have to look for the nearest exit, which is back into external orbit at C. However, when they enter external orbit they become negatively charged electrons and are once again pushed along until they find an exit. This continuous cycle inevitably creates a "motor", or the energy observed and referred to as atomic energy. The fact that this energy can be harvested in a reactor entails that this design not only creates a "motor", but also in the process creates a "dynamo" or "generator", basically a reactor. The energy bands act as power lines feeding Trons with momentum that pushes them along and keeps them circling. Trons dipping into and out of the nucleus do not give off radiation or photons because they are riding energy bands into and out of the nucleus at constant velocity. When the atom is observed, the process of dipping into the nucleus is not obvious, and it appears as though the electrons simply maintain a round or circular orbit, which is the classical manner in which they are depicted. Understandably, the Trons are moving at such high velocity that when observed it will appear as though they are standing still and have a permanent residence in the nucleus and in orbit, when in fact they are constantly on the move throughout these locations, making them fundamentally impermanent.
The Tron likely has a standard mass. The only reason the proton is heavier than the electron is that when the Tron circles into the nucleus, its rate of acceleration increases due to travelling a shorter circumference, and this makes its mass greater than when it is in outer orbit as an electron. Importantly, this explains why Trons appear never to run out of energy and collapse into the nucleus, as is expected for a Hydrogen atom, for example. Rather than collapse, they intentionally dip or dive into the nucleus; this design entails that the energy bands act as accelerators. It also explains why neutrons are difficult to find: Trons become neutral for only the shortest duration. They are riding energy bands where they circle into and out of the nucleus. These energy bands (from which quantum mechanics gets its name), by repelling Trons both in the nucleus and in external orbit, keep feeding them with energy or momentum with which they amplify mass at T4 and sustain their orbital movement. The energy bands in quantum mechanics are simply "fields". This complex explanation is necessary when the atom is viewed as having electrical charges and magnetic fields. However, all this complexity can be dropped if the Trons, which at this stage can be compared to graphical "sprites", are simply programmed to move in the manner observed, and therefore orbit under their own power, which may very well be the case. In addition, the path they take may be determined by underlying code. In that case the energy bands or "quantums" of quantum mechanics, like "fields", do not exist, as they are nothing more than by-products of processing taking place hidden below the size and scale of electrons, where the BE condensate or fine particulate constructs an atom (see the animation below).
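The acceleration step in the argument above can be stated with the standard relation for uniform circular motion. Assume, for illustration only, that the Tron keeps the same speed $v$ in its outer orbit (radius $r_{\text{orbit}}$) and inside the nucleus (radius $r_{\text{nucleus}}$). Then its centripetal acceleration scales inversely with radius. Since circumference is $C = 2\pi r$, a shorter circumference means a larger acceleration. The symbols are introduced here for the sketch, and the relation covers only the acceleration, not the proposed link between acceleration and mass.

$$
a = \frac{v^{2}}{r}, \qquad C = 2\pi r \quad\Longrightarrow\quad \frac{a_{\text{nucleus}}}{a_{\text{orbit}}} = \frac{r_{\text{orbit}}}{r_{\text{nucleus}}} = \frac{C_{\text{orbit}}}{C_{\text{nucleus}}}
$$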

The Collision Drive: harnessing gravitational force

By advancing the understanding of how gravity works, from Newton to Einstein to autonomous matter, using the "fields do not exist" method applied through brute-force analysis, it is possible to engineer an apparatus and method that emulates how the Gravitron in atoms, shown in the diagrams above as T4, generates gravitational force. This apparatus and method is called the Collision Drive. The Gravitron is the Graviton or "Higgs Boson", and the mechanism replicates and generates a gravitational force at T4 that can be pointed in any direction to create propulsive force.

The Collision Drive uses Mechanical Engineering to emulate the force at T4, creating what can be referred to as entry-level or tier 1 gravitational force.

When analysing the mechanics of an atom, it is important not to be misled by terminology. A certain degree of flexibility is required. For instance, descriptions such as negative and positive charges, energy bands or a neutron can be useful when trying to explain how particles behave. However, a flow of electrons may be, in terms of mechanics, no more complicated than the flow of water in a downward-sloping river. There are implications when it is believed:

- that negative and positive charges actually exist, when in fact these are just different directions, types or stages of acceleration;
- that there are "permanent" electrons, neutrons, protons and gravitons in an atom, when in fact these are just Trons momentarily in different parts of an atom; or
- that orbits are circular, when in fact they curve into and out of the nucleus.

To use analytical brute force to unlock the secrets behind these problems, we can then say "electrons, protons, neutrons and gravitons" do not exist in order to force a different explanation, approach or dynamic onto the analysis. What we are in fact saying is that all of these components of an atom are created by Trons. Just like the "fields do not exist" method of analysis, it can be said that electricity and electrical charges "do not exist", because they are just aspects of the mechanical nature of an atom rather than of the "electrical" manner in which it is thought to operate. These terms therefore have to be handled dexterously to avoid interpretations being limited by how any terminology is used, framed, defined and applied, as the very process of defining can hinder the capacity to make deeper and more accurate inferences. Therefore, we need to be careful not to lose the capacity to think and solve problems outside the definition when scientific terminologies are created.

In this great video, Dianna Leilani Cowern (Physics Girl: follow the link to her awesome YouTube channel) uses an experiment with a tone generator, mechanical vibrator, plate and sand to create patterns from sand. The sand can be compared to the smeared atom or fine particulate in the BEC. The black plate represents the medium for entanglement connecting all the particles together.
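The sand-plate experiment can be reproduced qualitatively with the classic approximate formula for Chladni figures on a square plate. In this model, sand collects along nodal lines where the plate barely moves. This is a minimal sketch under stated assumptions:

- a unit square plate;
- the textbook approximation cos(nπx)cos(mπy) − cos(mπx)cos(nπy) = 0 for the nodal lines;
- arbitrary, illustrative choices of the mode numbers `n`, `m`, the grid size and the threshold.

The function names are hypothetical. The sketch models only the vibrating plate and sand, not the entanglement analogy drawn in the text.

```python
import math


def chladni_amplitude(x: float, y: float, n: int, m: int) -> float:
    """Approximate standing-wave amplitude at (x, y) on a unit square plate.

    Classic textbook approximation: the nodal lines, where sand collects,
    are where this value is zero. Requires n != m, otherwise the two terms
    cancel everywhere.
    """
    return (math.cos(n * math.pi * x) * math.cos(m * math.pi * y)
            - math.cos(m * math.pi * x) * math.cos(n * math.pi * y))


def sand_pattern(n: int, m: int, size: int = 21, threshold: float = 0.1) -> list[str]:
    """Return an ASCII grid: '#' where sand settles (near-zero amplitude), '.' elsewhere."""
    rows = []
    for j in range(size):
        y = j / (size - 1)
        cells = []
        for i in range(size):
            x = i / (size - 1)
            still = abs(chladni_amplitude(x, y, n, m)) < threshold
            cells.append("#" if still else ".")
        rows.append("".join(cells))
    return rows


if __name__ == "__main__":
    # Mode numbers chosen arbitrarily for illustration.
    for line in sand_pattern(n=3, m=1):
        print(line)
```

Changing `n` and `m` roughly plays the role of changing the tone generator's frequency. Each resonant mode rearranges the same sand into a different pattern.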